Artificial Intelligence

Artificial intelligence is a hotly debated topic these days, mainly because of the huge advances that have been made: computers are faster, algorithms are smarter, and so on.

Rule Based vs Self-learning

Game-playing machines started out rule-based. For example, a chess computer had to be explicitly programmed to play chess, and would often use a "brute force" approach: evaluate as many positions as possible, and then choose the move most likely to result in victory.
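To make the brute-force idea concrete, here is a minimal sketch on a game far smaller than chess. It assumes a simple Nim variant (one pile of stones, each player removes 1 to 3, and whoever takes the last stone wins). The game itself and the names `MAX_TAKE`, `score`, and `best_move` are my own illustrative choices, not taken from any real chess program. The search simply tries every legal move in every position and picks the one that leaves the opponent worst off.

```python
from functools import lru_cache

MAX_TAKE = 3  # assumed rule: a player removes 1 to 3 stones per turn


@lru_cache(maxsize=None)  # cache results so each position is searched only once
def score(stones: int) -> int:
    """Return +1 if the player to move can force a win, otherwise -1.

    The player who takes the last stone wins.
    """
    if stones == 0:
        # No stones left: the previous player took the last one and won.
        return -1
    # Try every legal move. The opponent's score is negated to get ours.
    return max(-score(stones - take) for take in range(1, min(MAX_TAKE, stones) + 1))


def best_move(stones: int) -> int:
    """Pick the move that leaves the opponent in the worst position."""
    moves = range(1, min(MAX_TAKE, stones) + 1)
    return max(moves, key=lambda take: -score(stones - take))


if __name__ == "__main__":
    print(best_move(10))  # number of stones to take from a pile of 10
```

Every rule of the game is hard-coded by the programmer here; the program never improves with experience. A real chess program cannot search the whole game like this, so it has to cut the search off at some depth and estimate the position from there.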

Today, many of the strongest game-playing programs are self-learning machines. They start out knowing little more than the rules of the game, and then continue to teach themselves.

Here are some examples of modern computers that have already beaten the world's best players.

| Organization | Computer | Game |
| --- | --- | --- |
| IBM | Deep Blue | Chess |
| IBM | Watson | Jeopardy! |
| DeepMind | AlphaGo | Go |
| OpenAI | Bot | Dota 2 |

Beating the world's best players is a huge achievement. Please don't underestimate what was done here.

Today's computers are often trained by letting them play against themselves. They literally learn from experience.
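Self-play can be illustrated with a toy example. The sketch below is only an assumption-laden illustration, not how any of the systems in the table were actually built: it uses a simple Nim variant (one pile, remove 1 to 3 stones, last stone wins) and a plain lookup table instead of a neural network. The names `train_by_self_play`, `greedy_move`, `START`, and `MAX_TAKE`, as well as the parameter values, are hypothetical. The program plays both sides of every game, and after each game it nudges its estimate for every move it made towards the final result.

```python
import random
from collections import defaultdict

MAX_TAKE = 3  # assumed rule: remove 1 to 3 stones per turn
START = 10    # assumed starting pile size


def legal_moves(stones):
    return list(range(1, min(MAX_TAKE, stones) + 1))


def train_by_self_play(episodes=20000, epsilon=0.2, step=0.1, seed=0):
    """Learn move values for Nim by playing both sides of every game."""
    rng = random.Random(seed)
    value = defaultdict(float)  # (stones, take) -> estimated result for the mover

    for _ in range(episodes):
        stones = START
        history = []  # moves in order, alternating between the two sides
        while stones > 0:
            moves = legal_moves(stones)
            if rng.random() < epsilon:
                take = rng.choice(moves)  # explore: try a random move
            else:
                # exploit: play the move currently believed to be best
                take = max(moves, key=lambda m: value[(stones, m)])
            history.append((stones, take))
            stones -= take

        # Whoever made the last move took the last stone and won.
        reward = 1.0
        for state_action in reversed(history):
            value[state_action] += step * (reward - value[state_action])
            reward = -reward  # the previous move belonged to the other side
    return value


def greedy_move(value, stones):
    """Return the move with the highest learned value."""
    return max(legal_moves(stones), key=lambda m: value[(stones, m)])


if __name__ == "__main__":
    learned = train_by_self_play()
    print(greedy_move(learned, START))
```

Nobody tells this program which moves are good. It only sees who won each game, and with enough games its table tends to favour the moves that win.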

Social Robots

With the help of speech recognition and text-to-speech software, it's now possible to have some kind of conversation with a robot. These robots are often referred to as social robots.

The best known example of a social robot is Sophia from Hanson Robotics.

She is essentially a robot connected to a computer over Wi-Fi. This means that, theoretically speaking, the resources she has access to are vast.

She currently has 62 facial expressions. She started out as just a head, but now she has arms and legs as well. Plug 'n play, I guess.

Here's a dramatized version of Sophia's "awakening". It's quite clever actually.

The self-learning aspect of social robots has surprised several programmers already.

Here's a live demonstration:

I suspect that some of the things Sophia says are scripted, but still, it's not bad.

Sophia and her creator Dr. David Hanson have also appeared before the United Nations to talk about the future of artificial intelligence.

For some reason she is now also a citizen of Saudi Arabia.

The way robots move has also improved vastly.

Here's a robot called Mim that can sing and dance:

For a better idea of what robots are capable of check out this video:

Military

I suspect the military is already some 20-30 years ahead. Who knows what sort of tech they have now?

Maybe to understand the military we need to look at what Hollywood is showing us today.

Or something like this where man and machine work together. Like a robot that follows orders.

It might also be a combination of man and robot. For example, some form of remote control, combined with some kind of artificial intelligence that keeps the robot on its feet. That's more or less how Unmanned Aerial Vehicles already work today. Automated killing machines.

You might laugh, but I wouldn't be surprised at all if the military already uses something like Skynet for strategic purposes. Not self-aware of course, but still. Any software errors at that level could be disastrous. A bird mistaken for an incoming missile, etc.

I wonder how many robots the US military has got today? Thousands? Millions?

And what happens when a robot kills a human? Then who is responsible?

Warnings

Some say that self-aware robots are the problem, but even if that never happens self-learning robots could still be a problem. For example, what if a robot concludes that "humans are the problem"? Then what?

People like Elon Musk and Stephen Hawking have already sounded the alarm, and have suggested regulations for artificial intelligence.

Maybe a bit like Isaac Asimov's Three Laws of Robotics:

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey orders given it by human beings except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

Or RoboCop's prime directives:

- Serve the public trust

- Protect the innocent

- Uphold the law

The fact that Elon Musk and Stephen Hawking are warning people about artificial intelligence means we should take it seriously.

The Future

I don't know what the future will be of course, but I'm definitely concerned...

Sure, it's not so bad now, but what about ten years from now? What happens when quantum computers hit the scene? Or what if some new kind of algorithm comes along?

Personally I don't think self-aware computers are the problem, but what if self-learning machines start making mistakes, and hurt someone?

Whatever happens I think we'll see a huge increase in man and machines working together.

Take, for example, products like Google Home or Amazon Echo. Both have speech recognition, and can control the connected appliances in your home.

Image: Daylen / Wikimedia Commons / CC BY 4.0

You just ask it a question, and it answers you. But at the same time, it's always listening... Big brother alert!

Personally I don't believe we'll be able to create self-aware robots for a long time, but then again, robots will always outsmart us when it comes to calculations and knowledge.

Just think of how Google has evolved over the last couple of years.

Here's what Michio Kaku thinks.

It will also be interesting to see what impact this kind of automation has on the job market as robots take over more and more human jobs.

I think that will happen faster than most people anticipate.

Whatever happens, we need to stay alert.

Conclusion

I think that the self-learning aspect of artificial intelligence could be a problem. Mistakes or unexpected side effects could occur. We must be careful how much responsibility we give to machines, and regulations should be in place.

Let's just hope we don't end up with Skynet.

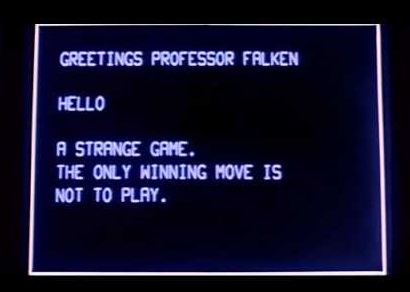

I would prefer the computer in the WarGames movie that figured out that no one wins a nuclear war.

Shall we play a game?